Amazon recently pulled the plug on its experimental AI-powered recruitment engine after discovering that the machine learning technology behind it was exhibiting bias against female applicants. The company made it clear that the tool “was never used by Amazon recruiters to evaluate candidates” but, according to Reuters, it had taught itself that male candidates were preferable, having observed patterns in résumés submitted to the company over a ten-year period, the majority of which came from men.

At first glance, it may appear that the bias stemmed from the AI itself. But when we talk of AI as intelligence demonstrated by machines, we often forget that the algorithms on which AI runs are designed and implemented by humans. Likewise, the data used to train those algorithms is very often created by humans, and as a result it can be tainted. It can reflect the personal biases of the people who created it, for example, and those biases can subsequently be picked up by the learning algorithms.

When word embedding goes awry

Minimising AI bias relies on three factors: the quality of the input data, the prediction algorithm, and testing the correctness of the output predictions. Of these, the quality of the training data set is one of the major contributors to the accuracy of the prediction output. As mentioned, one of the ways algorithmic bias can unwittingly occur is when the data used to train an AI model is tainted by factors such as existing human biases, incomplete knowledge, misrepresented data, or the ongoing interactions of users.
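
One practical way to catch tainted or misrepresented training data is to audit it before training. The sketch below is a minimal illustration, assuming each training record can be reduced to a (group, label) pair; the function name `audit_training_data` and the sample records are hypothetical and not taken from Amazon's system. It reports each group's share of the data and its positive-outcome rate, which would surface the kind of skew described in the Amazon case.

```python
from collections import Counter


def audit_training_data(records):
    """Summarise each group's share of the data and its positive-label rate.

    `records` is an iterable of (group, label) pairs, where label is 1 for a
    positive outcome (e.g. hired) and 0 otherwise. This format is an assumption.
    """
    counts = Counter()
    positives = Counter()
    for group, label in records:
        counts[group] += 1
        positives[group] += label
    total = sum(counts.values())
    return {
        group: {"share": n / total, "positive_rate": positives[group] / n}
        for group, n in counts.items()
    }


# Hypothetical training set: 80 records for men, 20 for women.
records = (
    [("male", 1)] * 30 + [("male", 0)] * 50
    + [("female", 1)] * 4 + [("female", 0)] * 16
)
report = audit_training_data(records)
for group, stats in report.items():
    print(f"{group}: share={stats['share']:.2f}, positive_rate={stats['positive_rate']:.2f}")
```

A large gap in either column does not prove that a model trained on the data will be biased, but it is a signal to rebalance or re-examine the data before training.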

In the case of Amazon’s recruitment tool, word embedding, an algorithmic technique commonly used in Natural Language Processing (NLP) and Natural Language Understanding (NLU), may have been a key contributor to the gender bias. Word embedding represents each word in a vocabulary as a vector, allowing it to be mapped as a point in a multidimensional space. Words with similar meanings have similar vector representations and are clustered together in that space. The words he, him, male and man, for example, will have similar representations and sit close together in the vector space, as will the words she, her, female and woman.
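
To make the idea of clustering concrete, the sketch below ranks words by cosine similarity, a common way of measuring how close two embedding vectors are. The three-dimensional vectors are invented for illustration; real embeddings such as word2vec or GloVe are learned from large text corpora and typically have hundreds of dimensions.

```python
import math

# Toy vectors invented for illustration; not learned from any real corpus.
EMBEDDINGS = {
    "he":    [0.90, 0.10, 0.05],
    "him":   [0.85, 0.15, 0.10],
    "man":   [0.80, 0.20, 0.30],
    "she":   [0.10, 0.90, 0.05],
    "her":   [0.15, 0.85, 0.10],
    "woman": [0.20, 0.80, 0.30],
}


def cosine(u, v):
    """Cosine of the angle between vectors u and v (1.0 means same direction)."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return dot / (norm_u * norm_v)


def nearest(word, k=2):
    """Return the k other words whose vectors are most similar to `word`'s."""
    target = EMBEDDINGS[word]
    scores = [
        (other, cosine(target, vec))
        for other, vec in EMBEDDINGS.items()
        if other != word
    ]
    scores.sort(key=lambda pair: pair[1], reverse=True)
    return [other for other, _ in scores[:k]]


print(nearest("he"))
print(nearest("she"))
```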

Word embedding also captures semantic relationships between words in the textual data, such as the relationship between countries and their capitals: France – Paris, Italy – Rome, United States of America – Washington DC, and so on. It’s here, however, that things may have gone slightly awry. If the recruitment data showed more female than male candidates being rejected, the algorithm would likely have drawn a close relationship between the words woman or female and reject. Conversely, it would have drawn a close relationship between the words man or male and select.
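
Embedding methods learn from how often words appear together, so a skew in the data becomes a skew in the learned associations. The sketch below illustrates this with pointwise mutual information (PMI), a co-occurrence statistic that count-based word vectors are often built from; it is a simplified stand-in for what an embedding would learn, not a reconstruction of Amazon's model. The records are hypothetical, each pairing a gendered term with an outcome.

```python
import math
from collections import Counter
from itertools import combinations


def build_pmi(documents):
    """Return a function computing document-level PMI for a pair of words.

    PMI(a, b) = log(P(a, b) / (P(a) * P(b))), where P(x) is the fraction of
    documents containing x and P(a, b) the fraction containing both.
    """
    n_docs = len(documents)
    word_counts = Counter()
    pair_counts = Counter()
    for doc in documents:
        words = sorted(set(doc))
        word_counts.update(words)
        pair_counts.update(combinations(words, 2))  # keys are sorted pairs

    def pmi(a, b):
        joint = pair_counts[tuple(sorted((a, b)))] / n_docs
        if joint == 0:
            return float("-inf")  # the words never co-occur
        p_a = word_counts[a] / n_docs
        p_b = word_counts[b] / n_docs
        return math.log(joint / (p_a * p_b))

    return pmi


# Hypothetical records in which women are rejected more often than men.
documents = (
    [["female", "reject"]] * 8 + [["female", "select"]] * 2
    + [["male", "reject"]] * 4 + [["male", "select"]] * 6
)
pmi = build_pmi(documents)
for word in ("female", "male"):
    print(word, "reject", round(pmi(word, "reject"), 3))
    print(word, "select", round(pmi(word, "select"), 3))
```

With these counts, female has a positive PMI with reject (the two appear together more often than chance would predict) and male a negative one, mirroring the relationship described above.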

It’s important, therefore, to avoid bias in word embedding. Newer algorithms such as Gender-Neutral Word Embedding are designed to keep the information encoded in word embeddings independent of gender influences.
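
GN-GloVe, the best-known Gender-Neutral Word Embedding method, changes how the embedding is trained. A simpler, related post-processing idea, the "neutralise" step of hard debiasing, is sketched below instead: it estimates a gender direction from the difference between he and she and removes a word's component along that direction. The vectors are invented for illustration, and a single word pair is a crude estimate of the gender direction; published approaches typically estimate it from several pairs.

```python
import math


def subtract(u, v):
    return [a - b for a, b in zip(u, v)]


def dot(u, v):
    return sum(a * b for a, b in zip(u, v))


def normalise(v):
    """Scale v to unit length."""
    norm = math.sqrt(dot(v, v))
    return [a / norm for a in v]


def neutralise(word_vec, gender_dir):
    """Remove the component of word_vec along the unit vector gender_dir."""
    projection = dot(word_vec, gender_dir)
    return [a - projection * g for a, g in zip(word_vec, gender_dir)]


# Toy vectors invented for illustration.
he = [0.9, 0.1, 0.4]
she = [0.1, 0.9, 0.4]
reject = [0.2, 0.6, 0.8]  # hypothetical vector skewed towards "she"

gender_dir = normalise(subtract(he, she))
debiased = neutralise(reject, gender_dir)
print("gender component before:", round(dot(reject, gender_dir), 3))
print("gender component after: ", round(dot(debiased, gender_dir), 3))
```

After neutralising, reject is equally close to he and she along the estimated gender direction, while its other components are left unchanged.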


Eliminating bias and ensuring fairness

In time, AI bias will be eliminated altogether. AI is really no different from any other software, after all, and just as no organisation would ever put software live without some form of quality assurance, AI solutions must be tested to ensure they meet the requirements set for them. Indeed, most of the stories we hear about inaccurate AI predictions arise because the people responsible for implementing the technology didn’t understand the decision-making process, and attempted to solve real-world problems with poorly trained and inadequately tested algorithmic models.
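
Treating AI like any other software suggests writing tests for it. The sketch below is one hypothetical quality-assurance check, assuming a labelled held-out test set and a group attribute for each example: it computes accuracy separately for each group and fails if the gap exceeds a tolerance. The function names and the 0.05 default tolerance are illustrative assumptions, not an established standard.

```python
def accuracy_by_group(y_true, y_pred, groups):
    """Return the accuracy of y_pred against y_true for each group separately."""
    totals, correct = {}, {}
    for truth, pred, group in zip(y_true, y_pred, groups):
        totals[group] = totals.get(group, 0) + 1
        correct[group] = correct.get(group, 0) + int(truth == pred)
    return {group: correct[group] / totals[group] for group in totals}


def check_accuracy_gap(y_true, y_pred, groups, max_gap=0.05):
    """Raise AssertionError if per-group accuracies differ by more than max_gap.

    max_gap is a hypothetical tolerance; a real project would set its own.
    """
    acc = accuracy_by_group(y_true, y_pred, groups)
    gap = max(acc.values()) - min(acc.values())
    if gap > max_gap:
        raise AssertionError(f"accuracy gap {gap:.2f} exceeds {max_gap}: {acc}")
    return acc


# Hypothetical held-out test set: true outcomes, model predictions, groups.
y_true = [1, 0, 1, 0, 1, 0, 1, 0]
y_pred = [1, 0, 1, 0, 0, 1, 0, 0]
groups = ["male"] * 4 + ["female"] * 4

try:
    check_accuracy_gap(y_true, y_pred, groups)
    print("QA check passed")
except AssertionError as err:
    print("QA check failed:", err)
```

A check like this would run alongside the usual functional tests, so that a model whose accuracy differs sharply between groups fails QA in the same way as any other regression.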

Fortunately, many organisations already recognise that AI bias can occur and are taking active measures to avoid it. Microsoft’s FATE group, for example, aims to address the need for transparency, accountability and fairness in AI and machine learning systems, while IBM’s AI Fairness 360 (AIF360) is an open-source toolkit of metrics for checking datasets and machine learning models for unwanted bias, accompanied by algorithms to mitigate such bias. Elsewhere, Google offers a bias-detecting ‘What-If’ tool in TensorBoard, the web dashboard for its TensorFlow machine learning framework.
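
Two metrics that AIF360 reports, statistical parity difference and disparate impact, are simple enough to compute by hand, which helps show what such a toolkit checks. The sketch below does so in plain Python rather than through AIF360's API, and the screening predictions are hypothetical. The 0.8 threshold reflects the "four-fifths rule", a commonly cited rule of thumb in US employment practice, used here only as an illustrative cut-off.

```python
def selection_rate(predictions, groups, group):
    """Fraction of members of `group` with a positive prediction (1)."""
    selected = [p for p, g in zip(predictions, groups) if g == group]
    return sum(selected) / len(selected)


def fairness_metrics(predictions, groups, unprivileged, privileged):
    """Statistical parity difference and disparate impact between two groups."""
    rate_u = selection_rate(predictions, groups, unprivileged)
    rate_p = selection_rate(predictions, groups, privileged)
    return {
        # 0 means equal selection rates; negative favours the privileged group.
        "statistical_parity_difference": rate_u - rate_p,
        # 1 means equal selection rates; below 1 favours the privileged group.
        "disparate_impact": rate_u / rate_p,
    }


# Hypothetical screening output: 1 = shortlisted, 0 = rejected.
predictions = [1, 1, 1, 0, 1, 0, 0, 0, 1, 0]
groups = ["male"] * 5 + ["female"] * 5

metrics = fairness_metrics(predictions, groups, unprivileged="female", privileged="male")
flagged = metrics["disparate_impact"] < 0.8  # illustrative four-fifths cut-off
print(metrics, "flagged:", flagged)
```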

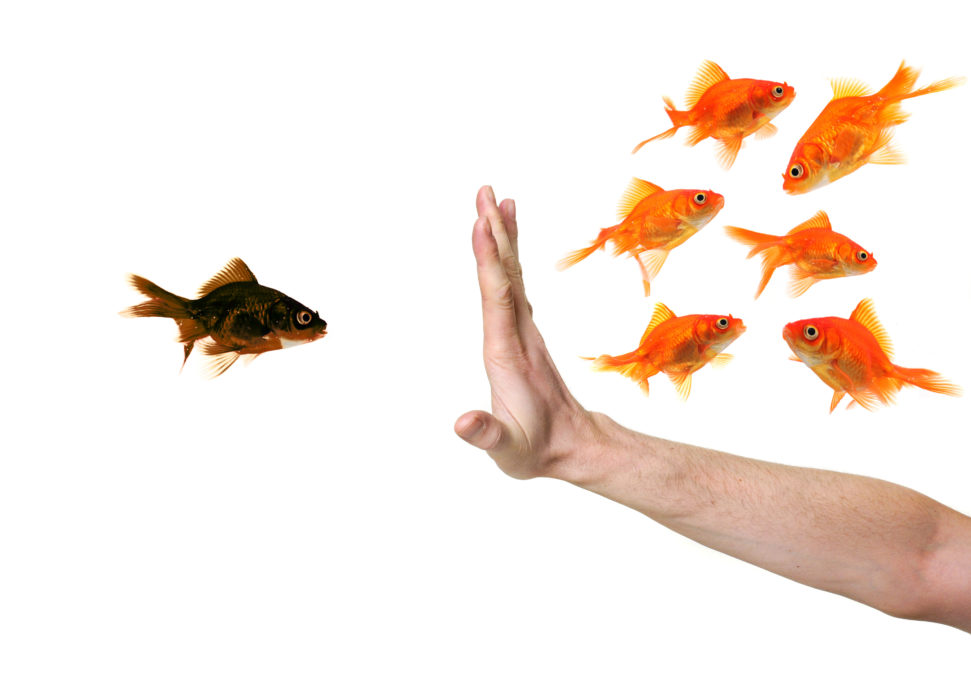

Both Microsoft and IBM mention fairness, and the distinction between fairness and discrimination is an important one. Discrimination is action taken on the basis of bias, whereas fairness is the absence of bias. Amazon’s AI-based recruitment system made biased predictions, which may have been unfair; but if, as Amazon claims, the tool was not used to inform its hiring decisions, there was no discrimination.


The fact is, bias has been introduced into AI by humans. And while it doesn’t exist because someone made an explicit effort to create a biased system, but rather because of a lack of forethought about ensuring that the system doesn’t make aberrant predictions, it is still the responsibility of humans to ensure fairness in AI systems. Only then will tools such as Amazon’s recruitment engine be implemented successfully, with full trust and confidence in their prediction output.

Dinesh Singh is AVP, Technology at Aricent.