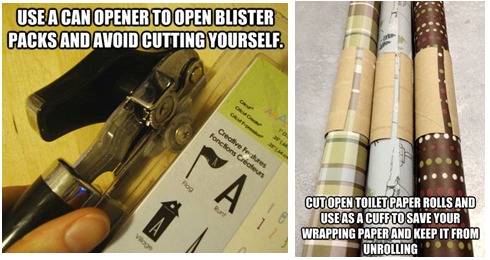

Every so often, my news feed features an article that offers “The Top 10 Life Hacks.” These are tips and tricks about how to use ordinary household products in unexpected ways to improve your life: “…and tip number 7 will blow your mind!!!”

Admittedly, I’ve been suckered into opening this clickbait. To be honest, there are times when I’m pleasantly surprised. For example, who knew that you could cut through annoying plastic blister packs with a can opener, or use a toilet paper roll to keep wrapping paper from unrolling?

I tried the two “hacks” above and guess what? They “kinda” worked…for a while. The can opener cut through the seam where the plastic was fused, but it failed to cut through the length of the packaging. The toilet paper roll held the wrapping paper for a while, but ultimately the cardboard weakened and the wrapping paper unraveled. Not surprisingly, using scissors for the plastic blister packs and a small piece of tape for the wrapping paper worked much, much better.

In a very similar vein, many organizations are now considering using RPA to automate software testing: a “tech hack” for software testing, of sorts. However, just as the toilet paper roll didn’t present a sustainable solution for keeping my wrapping paper from unrolling, RPA is not a sustainable solution for software test automation…and the modifications required to make the RPA tool sustainable for the task of software test automation would be, well, a hack.

If you already have an RPA tool in your organization and you’re looking to get started with test automation, your RPA tool might seem like a logical choice. It’s usually relatively easy to automate some fundamental test scenarios (e.g., create a new user and complete a transaction), add validation, and believe that you’re on the path to test automation.

However, it’s important to recognize that successful (and sustainable) test automation requires much more than the ability to click through application paths. To rise above the dismal industry average test automation rate of <20%, teams also must be able to construct and stabilize an effective automated test suite. RPA tools usually aren’t designed to enable this. As a result, you will hit test automation roadblocks such as delays waiting for the required test data and test environments, inconsistent results that erode trust in the automation initiative, and “bloated” test suites that consume considerable resources but don’t deliver clear, actionable feedback.

For a quick overview of the difference in scope between RPA tools and test automation tools, compare the following definitions from Gartner:

RPA tools “perform ‘if, then, else’ statements on structured data, typically using a combination of user interface (UI) interactions, or by connecting to APIs to drive client servers, mainframes or HTML code. An RPA tool operates by mapping a process in the RPA tool language for the software “robot” to follow, with runtime allocated to execute the script by a control dashboard.”

Test automation tools “enable an organization to design, develop, maintain, manage, execute and analyze automated functional tests … They provide breadth and depth of products and features across the software development life cycle (SDLC). This includes test design and development; test case maintenance and reuse; and test management, test data management, automated testing and integration, with a strong focus on support for continuous testing.”

The need for these additional testing capabilities becomes clear when you consider some of the core differences between:

• Automating sequences of tasks in production environments to successfully execute a clearly-defined path through a process so you can complete work faster, and

• Automating realistic business processes in test environments to see where an application fails so you can make informed decisions about whether an application is too risky to release

What do these differences mean for software testing?

• Automation must execute in a test environment that’s typically incomplete, evolving, and constrained

• Managing stateful, secure, compliant test data becomes a huge challenge

• Effective test case design is essential for success

• Failures need to provide insight on business risk

To put it into more concrete terms, let’s consider the example of testing an online travel service. Assume you want to check the functionality that allows a user to extend their prepaid hotel reservation. First, you’d need to decide how many tests are required to thoroughly exercise the application logic, and what data combinations each would need to use.
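As a rough illustration of that first step, here is a minimal Python sketch that enumerates data combinations for the reservation extension scenario. The input dimensions, their values, and the `expected_outcome` rule are assumptions made up for this example; a real test design would derive them from the application’s actual requirements and would likely prune the combinations by risk.

```python
from itertools import product

# Hypothetical input dimensions for the "extend prepaid reservation" scenario.
ROOM_AVAILABLE = [True, False]
CARD_APPROVED = [True, False]
EXTRA_NIGHTS = [1, 7]


def expected_outcome(room_available, card_approved):
    """Assumed business rule: the extension succeeds only if both checks pass."""
    if not room_available:
        return "rejected: no room"
    if not card_approved:
        return "rejected: payment denied"
    return "extended"


def build_test_cases():
    """Return one test case per combination of the input dimensions."""
    cases = []
    for room, card, nights in product(ROOM_AVAILABLE, CARD_APPROVED, EXTRA_NIGHTS):
        cases.append(
            {
                "room_available": room,
                "card_approved": card,
                "extra_nights": nights,
                "expected": expected_outcome(room, card),
            }
        )
    return cases


if __name__ == "__main__":
    for case in build_test_cases():
        print(case)
```

Even with only three small dimensions, this yields eight cases, which hints at how quickly the test data problem grows.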

Then, you’d need to acquire and provision all the data required to set the application to the state where the test scenario can be executed. In this case, you need (at least) an existing user account with an existing prepaid reservation for some date in the future—and you could not use actual production data, due to privacy regulations like GDPR.

Next, you need a way to invoke the required range of responses from the connected hotel reservation system (a room is available/not available), the credit card provider (transaction approved/denied), and so on, but without actually reserving a room or charging a credit card.

You would need to automate the process, of course. This involves logging in, retrieving the existing reservation, indicating that you want to modify it, then specifying the length of the extension.

Once you had the complete process automated, you’d need to configure a number of validations at different checkpoints. Were the appropriate details sent to the hotel in the appropriate message format? Was the reservation updated in your user database? Was payment data properly sent to the credit card provider? Were any account credits applied? Did the user receive an appropriate message if the reservation could not be extended? What about if the credit card was denied? And if the credit card was denied, did your system revert to the original reservation length rather than add additional nights that were not actually paid for?
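To make a few of those checkpoints tangible, here is a minimal Python sketch of the “credit card denied” case, using the standard library’s `unittest.mock` to stand in for the hotel system and the card provider so that nothing is really booked or charged. The `extend_reservation` function, its parameters, the nightly rate, and the messages are all hypothetical stand-ins for the application under test, not a real API.

```python
from unittest.mock import Mock


def extend_reservation(reservation, extra_nights, hotel, card, nightly_rate=100):
    """Hypothetical system under test; returns a user-facing message."""
    if not hotel.check_availability(reservation["id"], extra_nights):
        return "Sorry, the room is not available for those dates."
    amount = nightly_rate * extra_nights
    if not card.charge(reservation["user"], amount):
        # Nothing has been modified yet, so the original length is preserved.
        return "Your card was declined; your reservation is unchanged."
    reservation["nights"] += extra_nights
    hotel.send_update(reservation["id"], reservation["nights"])
    return "Your reservation has been extended."


def run_denied_card_check():
    """Simulate an available room but a denied card, then validate checkpoints."""
    hotel = Mock()
    hotel.check_availability.return_value = True  # stubbed hotel response
    card = Mock()
    card.charge.return_value = False  # stubbed "transaction denied"

    reservation = {"id": "R1", "user": "test-user", "nights": 3}
    message = extend_reservation(reservation, 2, hotel, card)

    # Checkpoint: the reservation kept its original length.
    assert reservation["nights"] == 3
    # Checkpoint: the right amount was sent to the card provider.
    card.charge.assert_called_once_with("test-user", 200)
    # Checkpoint: no update was sent to the hotel.
    hotel.send_update.assert_not_called()
    # Checkpoint: the user received an appropriate message.
    assert "declined" in message
    return message


if __name__ == "__main__":
    print(run_denied_card_check())
```

The same pattern would be repeated for the other outcomes (room unavailable, everything approved), each with its own set of checkpoints.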

Now imagine that your company decided to add a $10 change fee for all prepaid reservations. Could you easily slip this new requirement into your existing automated tests—or would you have to substantially rework each and every test to accommodate this minor change?
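One way a test suite might absorb that kind of change is to keep expected values in a single shared rule rather than hard-coding them in every test. The Python sketch below assumes a hypothetical `PricingRules` object and an invented nightly rate of 100; only the $10 change fee comes from the scenario above.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class PricingRules:
    """Hypothetical pricing rules shared by every test in the suite."""
    nightly_rate: int = 100  # assumed rate, for illustration only
    change_fee: int = 0


def expected_charge(extra_nights, rules):
    """Amount the tests expect to be charged for an extension."""
    return rules.nightly_rate * extra_nights + rules.change_fee


# Extension lengths covered by the suite (hypothetical).
CASES = [1, 2, 7]


def expected_charges(rules):
    """Map each extension length to its expected charge under the given rules."""
    return {nights: expected_charge(nights, rules) for nights in CASES}


if __name__ == "__main__":
    print(expected_charges(PricingRules()))
    # Introducing the new fee is a one-line change to the shared rules.
    print(expected_charges(PricingRules(change_fee=10)))
```

With expectations centralized like this, the new requirement touches one definition instead of each and every test.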

Even this simple example exposes some of the many software testing complexities that RPA tools just aren’t designed to address. RPA tools are built to automate specific tasks within a sequence. Software test automation tools are designed to measure the resilience of a broader sequence of tasks. To put it bluntly: RPA tools are architected to make a process work. But for software testing, you need tools that help you determine how a process can possibly break.

Ineffective software test automation is notorious for delaying releases while consuming incredible amounts of resources. As CIOs invest more and more in digital transformation initiatives that improve the customer experience through faster software delivery, skimping on software testing is counterproductive. Choosing the right tool for the job will pay off significantly in terms of accelerated delivery, reduced business risk, and more resources to dedicate to innovation.

Wayne Ariola is the author of Continuous Testing for IT Leaders and a well-known keynote speaker in the DevOps and App Dev space.