The threats and opportunities created by artificial intelligence have long been documented. Stephen Hawking and Tesla CEO Elon Musk have warned of the potential threat to humankind, while Facebook CEO Mark Zuckerberg has said AI will lead society and humanity down a path of great possibility and innovation.

However, echoing the views of Hawking and Musk, 116 AI leaders and robotics experts have written a letter to the United Nations urging it to take note and prevent the rise of ‘killer robots’.

Indeed, Musk was among those who signed the letter, which warns of “a third revolution in warfare” and calls for a ban on the use of AI in weaponry. It argues that “lethal autonomous” technology is a “Pandora’s box” waiting to be opened at any moment, and that time is of the essence: “we do not have long to act”.

“Once developed, they will permit armed conflict to be fought at a scale greater than ever, and at timescales faster than humans can comprehend,” the letter says.

“These can be weapons of terror, weapons that despots and terrorists use against innocent populations, and weapons hacked to behave in undesirable ways.”

“Once this Pandora’s box is opened, it will be hard to close.”

The experts behind the letter are ultimately calling for a ban on “morally wrong” technology under the UN Convention on Certain Conventional Weapons (CCW).

Other notable technology leaders who have signed the letter include Mustafa Suleyman, co-founder of Google’s DeepMind.

It should be noted, however, that this letter does not condemn all forms of AI, automation or machine learning. It simply wants to bring the issue of AI and weaponry firmly into the public and political eye.

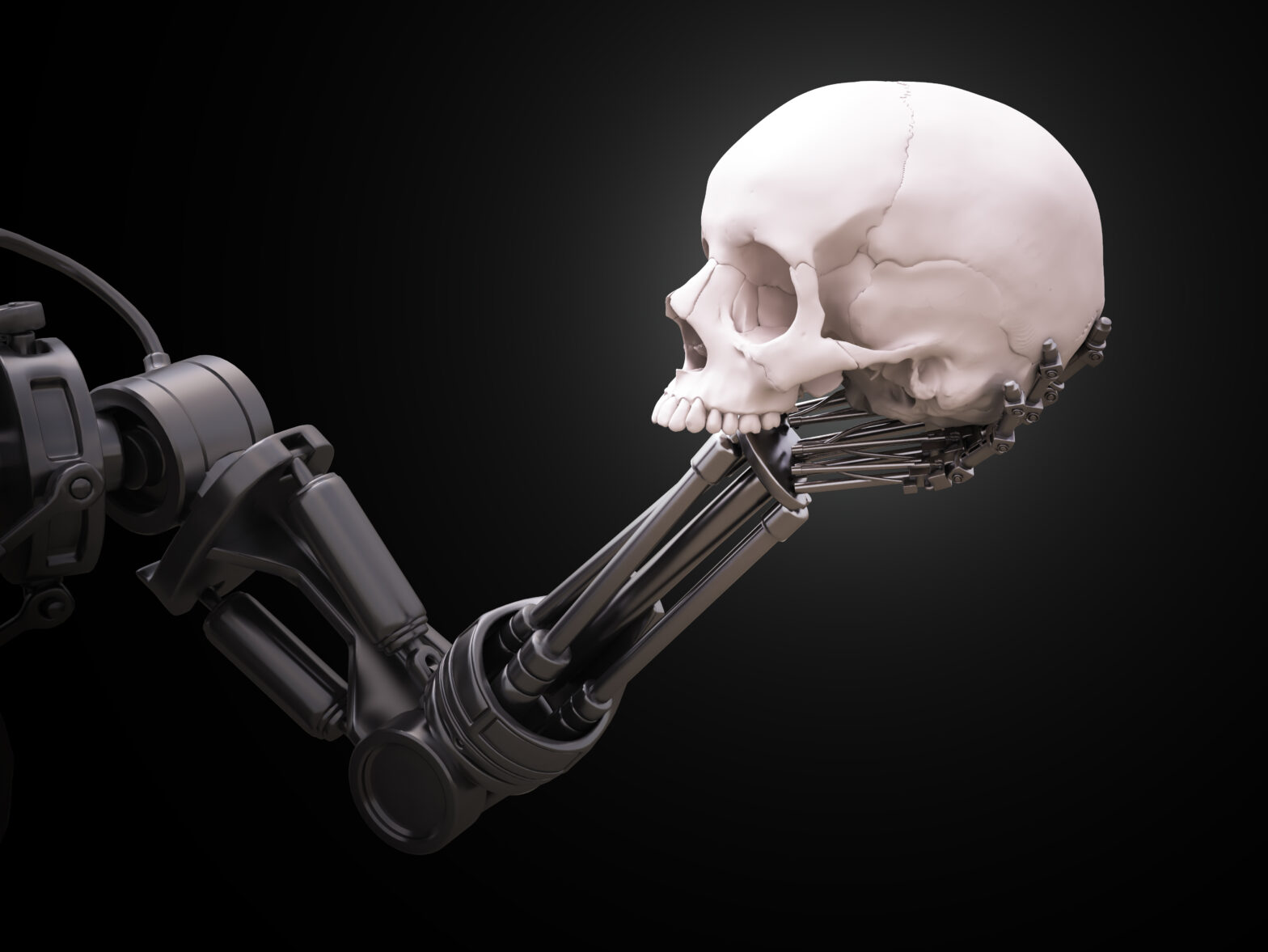

The ‘killer robot’

In the eyes of the letter’s signatories, a killer robot is a fully autonomous weapon that can select and engage targets freely, without human intervention. It should be noted that this technology does not yet exist, but is on the horizon.

Those who oppose the future use of this technology believe it is a threat to humanity, and should be banned.

However, those in favour of killer robots suggest that this type of capability may be necessary in future conflicts. They propose a moratorium, instead of an outright ban.

In the UN’s sights

This letter is not news to the UN, which is aware of the potential threat posed by AI in weaponry. A meeting of a UN group on autonomous weaponry was scheduled for next Monday, although it has been postponed until November, according to the website. On top of this, a potential ban on the development of robot technology in weaponry has previously been discussed by UN committees.

Ethics and morality

In light of this letter from AI leaders warning about the development of ‘killer robots’, Ray Chohan, SVP, Corporate Strategy at PatSnap, takes a look at what is being done in the innovation landscape to incorporate a system of ethics and morality into artificial intelligence.

“Artificial intelligence is an area that has had increasing publicity and investment ever since the 50s, although it only really started to become popular in the late 80s and 90s as organisations began to see the utility it could one day achieve. In the 2000s, as the computing power and storage that could support the technology increased exponentially, it started becoming mainstream, and patents applied for were granted at a much higher rate.”

“Looking at the patent landscape over the past few years, new inventions that specifically address the need for ethics-aware artificial intelligence are thin on the ground. One patent filed in May 2017 proposes a ‘Neuro-fuzzy system’, which can represent moral, ethical, legal, cultural, regional or management policies relevant to the context of a machine, with the ability to fine-tune the system based on individual, corporate, societal or cultural preference. The technology refers to Boolean algebra and fuzzy logic. While it appears there are some people who are looking into this area, much more investment will be needed if real progress is to be made.”

“The company that has filed the most patents within the artificial intelligence/machine learning patent family that include the terms ‘ethics’ or ‘morality’ is Natera, which is involved in genetic testing. This is clearly not about the physical dangers of AI, but likely more to do with artificial intelligence making use of extremely personal patient data. Looking at the wider artificial intelligence market, the clear leaders are the likes of IBM, Microsoft, Fujitsu and Hitachi. Nowhere to be seen in the top 15 organisations investing in this area are academic institutions or government agencies.”

“As the world’s largest organisations gather personal data, and apply machine learning and artificial intelligence to it to gain insight, it would appear that where we are most at threat, at least for the foreseeable future, is in how companies use our personal data. Government regulations can play a huge part in governing companies’ R&D investment decisions. For example, artificial intelligence patents that concerned ethics or morality saw a spike in 2014, a year in which the GDPR was being widely discussed in the EU, particularly around the ‘right to be forgotten’. As regulation like the GDPR comes into play, we are likely to continue to see an increase in innovation in this area, as new technologies are dreamed up to better deal with personal data in an ethical manner.”