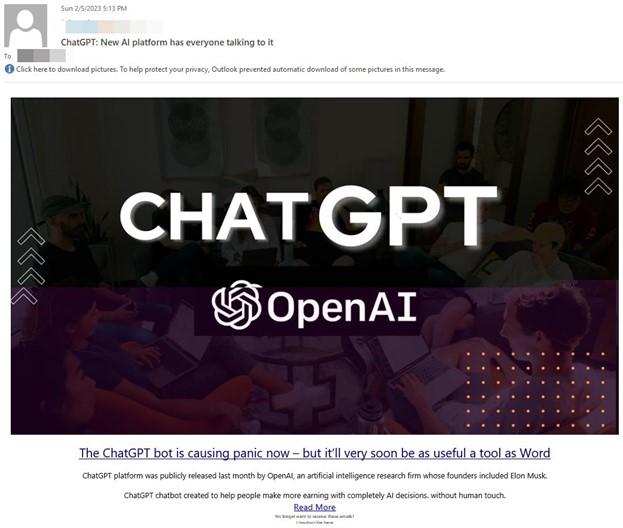

The phishing campaign, found to target users in Denmark, Germany, Australia, Ireland and the Netherlands, begins with a scam email containing a link to “ChatGPT”.

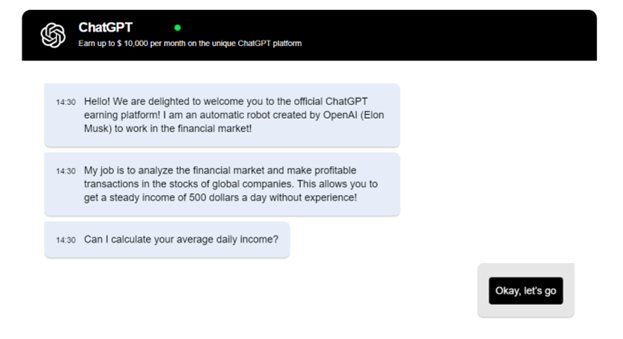

The link leads to a copycat version of the chatbot, luring users with financial opportunities that pay up to $10,000 per month “on the unique ChatGPT platform”. This goes beyond the fake apps that have recently appeared on the Google and Apple app stores, which offer users monthly or weekly subscriptions.

Scammers then recommended a minimum investment of €250, asking for bank card details as well as an email address, phone number and ID credentials.

The fake version of ChatGPT, which, unlike the legitimate software, only allowed a selection of pre-determined answers to each query, was accessible via an already blacklisted domain, https://timegaea[.]com.


How the scam works

Once accessed, the counterfeit chatbot began with a short intro on its role in analysing financial markets, claiming to allow anyone to become a successful investor in global stocks, before asking users for permission to calculate their average daily income.

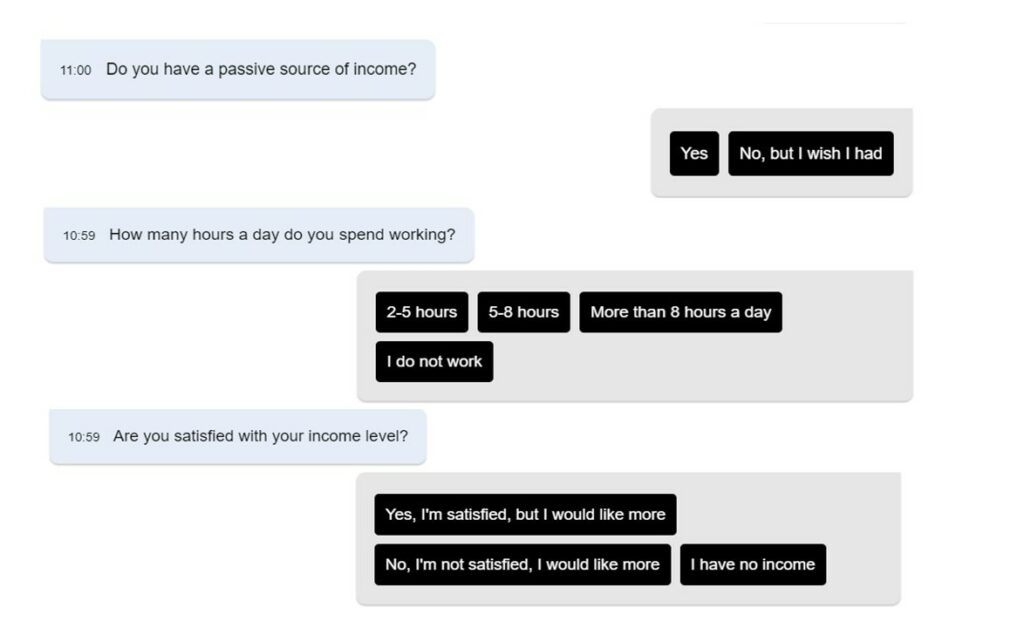

According to Bitdefender researchers who went along with the scheme, the software went on to ask about passive sources of income, how many hours per day are spent working, and whether the user is satisfied with their current income.

Then, it asked for an email address “for instant verification”, and a phone number to set up a WhatsApp account dedicated to said investment. The researchers then received a call from a “representative” who spoke, in Romanian, about investing in “crypto, oil, and international stock” (Bitdefender is headquartered in Bucharest).

After asking for further details such as the income of family members, the call handler alluded to a minimum starting investment of €250, before asking for the last six digits of a valid ID card. The researchers instead asked for a link to the investment portal to be sent over email.

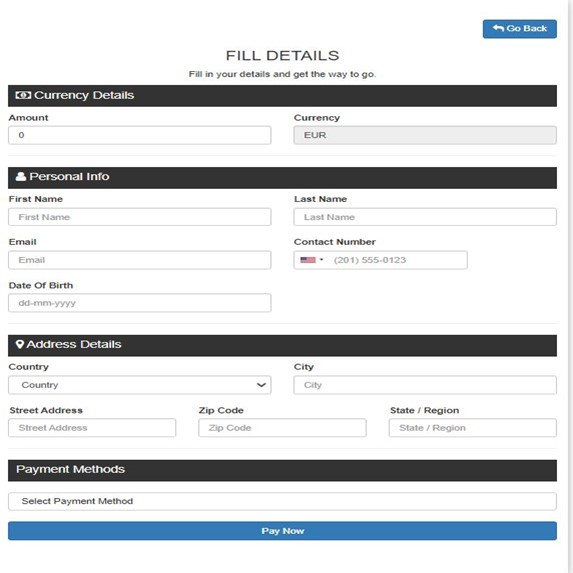

The link led to a form with fields for an array of personal details. The researchers entered a made-up credit card number, and the payment never appeared to go through.

The person on the other end of the phone also claimed to represent London-based company Import Capital, the domain of which has appeared in an alert from the Financial Conduct Authority (FCA) stating that the firm is not authorised to conduct business in the UK.

However, the payment form provided made no mention of Import Capital, ChatGPT or any receiving entity.

How to avoid exploitation

Researchers involved in the investigation have called for the public to be on alert, as the campaign appears to be growing globally.

To avoid falling victim to phishing schemes, a Bitdefender spokesperson advises: “Scammers using new viral internet tools or trends to defraud users is nothing new. If you’re looking to test out the official ChatGPT and its AI-powered text-generating abilities, do so only using the official website.

“Don’t follow links you receive via unsolicited correspondence and be especially wary of investment ploys delivered on behalf of the company: they are a scam.”
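For readers who screen links programmatically, the advice above could be approximated with a simple host check. The following is a minimal sketch, not a Bitdefender tool: the function names and the `TRUSTED_HOSTS` entries are assumptions made for illustration, and the only blocked entry is the blacklisted domain named in the report.

```python
from urllib.parse import urlparse

# Assumption: hosts the reader treats as official. Not taken from the report.
TRUSTED_HOSTS = {"openai.com", "chat.openai.com"}

# The blacklisted domain named in the report, without the defanging brackets.
KNOWN_BAD_HOSTS = {"timegaea.com"}


def refang(url: str) -> str:
    """Turn a defanged URL such as 'example[.]com' back into a parseable one."""
    return url.replace("[.]", ".")


def classify_link(url: str) -> str:
    """Return 'blocked', 'trusted' or 'unknown' based on the link's host."""
    # Refang before parsing: square brackets in a host confuse URL parsers.
    host = (urlparse(refang(url)).hostname or "").lower()
    if host.startswith("www."):
        host = host[4:]
    if host in KNOWN_BAD_HOSTS:
        return "blocked"
    if host in TRUSTED_HOSTS:
        return "trusted"
    # Lookalike hosts fall through here, since matching is exact.
    return "unknown"


if __name__ == "__main__":
    for link in (
        "https://timegaea[.]com",
        "https://chat.openai.com/",
        "https://chat-openai.example.com/login",
    ):
        print(link, "->", classify_link(link))
```

Matching is exact on purpose, so a lookalike host that merely contains a trusted name is reported as unknown rather than trusted. Links without a scheme are also reported as unknown, because the parser cannot extract a host from them.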
