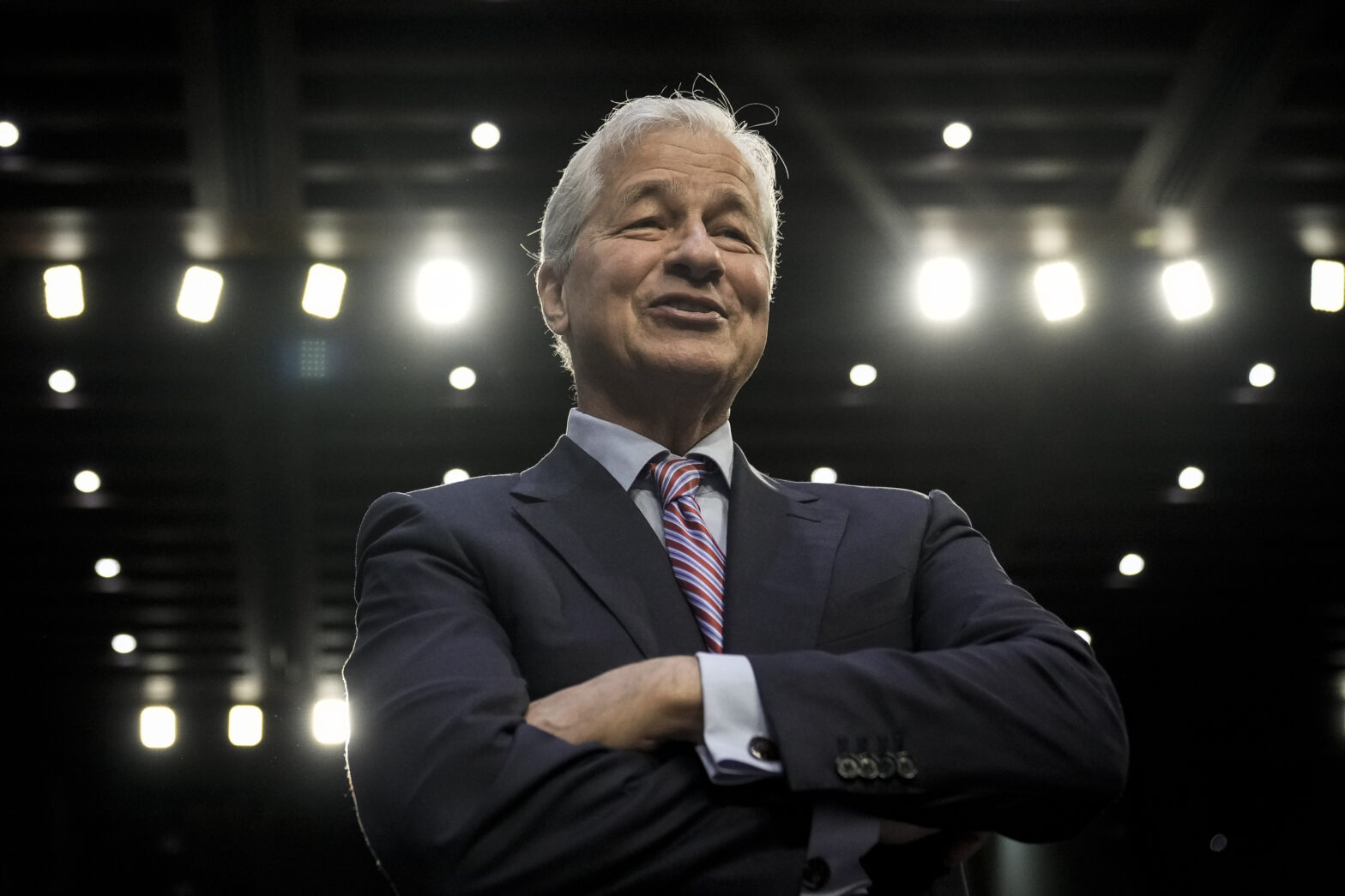

With AI capabilities poised to gradually automate manual, administrative workplace tasks at scale, Mr Dimon spoke with Bloomberg TV about the effects that the technology will have on future workers and business, reported The Telegraph.

As well as predicting that the technology will allow people to live to 100, JP Morgan’s chief executive said that regulators will, in time, put legal boundaries in place, as discussions continue globally around pending legislation and privacy guidelines.

“People have to take a deep breath… your children are going to live to 100 and not have cancer because of technology. And literally they’ll probably be working three-and-a-half days a week,” said Mr Dimon.

“Eventually we’ll have legal guardrails around it. It’s hard to do because it’s new, but it will add huge value.”

Since the public launch of OpenAI’s ChatGPT in November 2022, generative AI tools have been widely used to summarise internal conversations over email and apps, as well as generate project presentations at speed.

While Mr Dimon admitted that some job roles will be replaced, following Goldman Sachs’ prediction earlier this year of 300 million full-time jobs becoming fully automated, he added: “It’s a living breathing thing… for us, every single process… every app and every database, you can apply AI.

“It might be used as a copilot. It might be used to replace humans.”

Regulation discussions across UK and EU

Mr Dimon’s interview with Bloomberg TV comes as the UK gears up to host the first-ever AI Safety Summit at Bletchley Park, and as regulation discussions continue in the EU.

The safety summit, which is set to welcome governments, tech companies and academics from around the world, comes as UK Prime Minister Rishi Sunak lobbies to position Britain as a global AI leader.

Meanwhile, European Commission vice-president for values and transparency Věra Jourová has warned against possible “paranoia” or excessive restrictions when it comes to regulating AI innovation across the bloc, reported the FT.

The senior EU official has called for ‘solid analysis’ as the Commission, European Parliament and member states embark on final negotiations over the AI Act.

“We should not mark as high risk things which do not seem to be high risk at the moment,” the official told the FT.

“There should be a dynamic process where, when we see technologies being used in a risky way we are able to add them to the list of high risk later on.”

So far, regulation of risks to user safety, as well as legal obligations on foundation model vendors (including declaration of AI-generated content and copyrighted data), have remained high on the legislation discussion agenda.

Related:

Why big tech shouldn’t dictate AI regulation — With big tech having their say on how artificial intelligence should be monitored, Jaeger Glucina discusses why we need to widen the AI regulation discussion.