Companies are increasing their spend on security to help them spot attacks on their IT infrastructures and websites.

The SANS Institute estimates that IT budgets are shifting towards security, with between 7 and 9% of all spend going towards IT security in 2016 compared to between 4 and 6% in 2015.

As part of this spend, approaches like machine learning and analytics are being deployed to automate the process for detecting attacks.

By looking for identifying data points and using information theory to create groups with similar profiles, it’s possible to predict potential attacks and manage them more effectively.

What should you be looking for?

Attacks on websites are often carried out using automated software packages, otherwise known as bots.

Bots can be simple and carry out one task repeatedly, or they can be complex enough to impersonate human behaviour.

As webmasters get wiser to bot attacks, the bots have to become more sophisticated.

Attacks by bots tend to be based on monetary gain – for instance, running through username and password combinations to hijack a legitimate account, or committing click fraud on adverts.

Basic steps like limiting the number of times a computer associated with one IP address can attempt to log in to an account can stop simple attacks.
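A basic limit of this kind might look something like the sketch below. The `LoginRateLimiter` class, the threshold of five attempts and the 60-second window are all illustrative assumptions rather than anything taken from the research.

```python
from collections import defaultdict, deque


class LoginRateLimiter:
    """Sliding-window limit on login attempts per IP address (hypothetical helper)."""

    def __init__(self, max_attempts=5, window_seconds=60.0):
        # Both defaults are assumed values chosen for illustration only.
        self.max_attempts = max_attempts
        self.window_seconds = window_seconds
        self._attempts = defaultdict(deque)  # ip -> timestamps of recent attempts

    def allow(self, ip, now):
        """Record an attempt at time `now` (in seconds); return True if it is allowed."""
        recent = self._attempts[ip]
        # Discard attempts that have fallen out of the window.
        while recent and now - recent[0] >= self.window_seconds:
            recent.popleft()
        if len(recent) >= self.max_attempts:
            return False
        recent.append(now)
        return True
```

Because the counter is keyed on the IP address alone, a client that changes address on every request never reaches the threshold.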

However, advanced bots can cycle through thousands of IP addresses and mask their activities to avoid detection.

So, how can companies detect these more advanced attacks? One approach is to apply machine learning to ‘fingerprint’ a machine and then apply rules based on that set of defining characteristics.

This article examines how information theory, which deals with the limits of data compression and data transmission, can be applied as part of a machine learning implementation.

More specifically, this research project analysed the fonts installed across 1.2 million PCs to see what could be determined about those machines and how they could be identified as part of groups that are more or less risky.

Working with a sample set of customers, the researchers triggered a JavaScript request alongside an HTTP request from a device. This JavaScript request checked the requesting device for 495 fonts, capturing whether each font was installed.

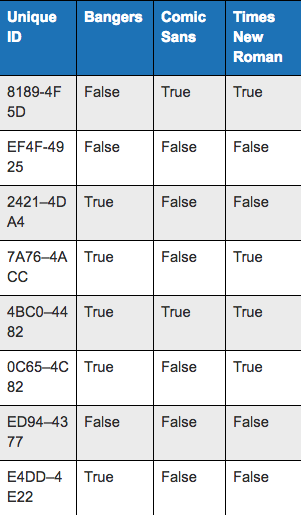

The ability to identify what group a machine might belong to is based on matching what fonts are installed, alongside other information such as the browser that machines are identified as using. As an example, looking for the presence of three fonts can help us make a comparison between machines. (See table #1 for an example.)

Using the presence of these fonts and the browser used as data points, it’s possible to allocate each machine to a group.
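As a rough sketch of that grouping step, each machine can be keyed on its browser plus one true/false flag per font. The `group_machines` function and the sample machines are made up for illustration; the font names are the three mentioned later in this article.

```python
from collections import defaultdict


def group_machines(machines, fonts):
    """Group machine ids by (browser, font-present flags) for the given fonts."""
    groups = defaultdict(list)
    for machine_id, info in machines.items():
        # The key is the browser followed by one boolean per font, in order.
        key = (info["browser"],) + tuple(font in info["fonts"] for font in fonts)
        groups[key].append(machine_id)
    return dict(groups)


# Made-up sample data, not taken from the research.
machines = {
    "m1": {"browser": "Chrome", "fonts": {"Arial", "Times New Roman"}},
    "m2": {"browser": "Chrome", "fonts": {"Arial", "Times New Roman"}},
    "m3": {"browser": "Firefox", "fonts": {"Comic Sans"}},
}
groups = group_machines(machines, ["Arial", "Comic Sans", "Times New Roman"])
```

Here `m1` and `m2` land in the same group because they share a browser and the same pattern of installed fonts, while `m3` forms a group of its own.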

From this, the presence of specific fonts can be used to determine and then predict other things.

In Distil Networks’ initial research project, the presence of certain fonts was used to judge the likelihood that other fonts would or would not be installed.
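The article does not say how that likelihood was estimated. One simple possibility, shown here purely as an assumption, is a frequency count: of the machines that have one font, what share also have another? The function name and sample data are hypothetical.

```python
def conditional_presence(font_sets, given_font, target_font):
    """Estimate P(target_font installed | given_font installed) from observed machines.

    `font_sets` is a list of sets, one set of installed font names per machine.
    Returns None when no machine has `given_font`.
    """
    with_given = [fonts for fonts in font_sets if given_font in fonts]
    if not with_given:
        return None
    return sum(target_font in fonts for fonts in with_given) / len(with_given)


# Made-up sample: three machines have Arial, two of those also have Times New Roman.
sample = [
    {"Arial", "Times New Roman"},
    {"Arial"},
    {"Comic Sans"},
    {"Arial", "Times New Roman", "Comic Sans"},
]
estimate = conditional_presence(sample, "Arial", "Times New Roman")
```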

This can be taken further using a mathematical function called Shannon entropy, which measures how many bits of data would be required to optimally encode a data set.

This can also be interpreted as the number of bits of information in the data. Shannon entropy also has the property that information is additive only when the components are independent of one another.
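To make this concrete, the sketch below computes Shannon entropy as the negative sum of p × log2(p) over the observed values. The `shannon_entropy` helper and the toy data are illustrative and not drawn from the research; they show that two independent columns add their information, while a duplicated column adds nothing.

```python
import math
from collections import Counter


def shannon_entropy(observations):
    """Shannon entropy, in bits, of the empirical distribution of `observations`."""
    counts = Counter(observations)
    total = sum(counts.values())
    return -sum((n / total) * math.log2(n / total) for n in counts.values())


# Toy data: each tuple is (font A installed, font B installed) for one machine.
independent = [(0, 0), (0, 1), (1, 0), (1, 1)]  # B tells us nothing about A
duplicated = [(0, 0), (0, 0), (1, 1), (1, 1)]   # B is a copy of A

print(shannon_entropy([a for a, _ in independent]))  # 1.0 bit from font A alone
print(shannon_entropy(independent))                  # 2.0 bits: 1 + 1
print(shannon_entropy(duplicated))                   # 1.0 bit: the copy adds nothing
```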

Using calculations based on the presence of certain things, like fonts, it’s possible to determine the entropy of the data involved.

In this case, entropy describes how many bits of information are required to distinguish each of the groups that can be found. This concept of entropy becomes especially powerful when we can compute the entropy of an arbitrary number of font columns.

Better still, we can compare entropy values computed with different numbers of columns to one another.

Based on this comparison, we were then able to spot the fonts with the most identifying characteristics from the overall group. From our initial sampling of 495 fonts, we were able to reduce this down to 50.
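The exact selection procedure is not described here. One plausible approach, offered only as an assumption, is greedy forward selection: repeatedly add whichever font column raises the joint entropy of the chosen set the most. The function names and the tiny data set below are hypothetical, and the sketch assumes `rows` is non-empty.

```python
import math
from collections import Counter


def joint_entropy(rows, columns):
    """Entropy, in bits, of the combined values in the given column indices."""
    counts = Counter(tuple(row[c] for c in columns) for row in rows)
    total = len(rows)
    return -sum((n / total) * math.log2(n / total) for n in counts.values())


def select_fonts(rows, k):
    """Greedily pick up to k column indices, each maximising joint entropy so far."""
    chosen = []
    remaining = list(range(len(rows[0])))
    while remaining and len(chosen) < k:
        best = max(remaining, key=lambda c: joint_entropy(rows, chosen + [c]))
        chosen.append(best)
        remaining.remove(best)
    return chosen


# Made-up data: one row per machine, one 0/1 column per font.
rows = [
    (1, 0, 0),
    (1, 0, 0),
    (1, 1, 1),
    (1, 1, 0),
]
print(select_fonts(rows, 2))  # [1, 2]
```

Column 0 is never picked because every machine has that font, so it carries no identifying information. A greedy search is not guaranteed to find the best possible subset, but it avoids evaluating every combination of columns.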

These identifiers presented the most information on groups and provided statistically relevant information on other criteria.

What can you do with this information?

You might ask what looking at fonts can tell you.

In the experiment, Distil collected information about the 495 fonts on about 1.2 million devices.

The maximum possible entropy of 1.2 million data points is $\log_2(1.2 \times 10^6) \approx 20$ bits; in other words, it would require about 20 optimally designed bits/fonts to identify these clients. Sadly, fonts aren’t installed on machines to be an optimally encoded identifier, and the entropy of our dataset is about 7.7 bits.
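The arithmetic behind these figures is easy to check. The snippet below uses the device count and the 7.7 figure quoted above; the helper names are hypothetical, and reading 7.7 bits as "roughly 208 equally likely groups" is an interpretive aid rather than a finding of the research.

```python
import math


def max_entropy_bits(n_devices):
    """Bits needed to give each of n_devices its own optimally encoded identifier."""
    return math.log2(n_devices)


def equivalent_uniform_groups(entropy_bits):
    """Number of equally likely groups that would produce the given entropy."""
    return 2 ** entropy_bits


print(round(max_entropy_bits(1_200_000), 1))  # 20.2
print(round(equivalent_uniform_groups(7.7)))  # 208
```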

By looking at this data, it was possible to crunch through and make predictions about potential device status.

This use of data provides a good example of how criteria can be used to spot patterns in behaviour or identifying characteristics over time by looking for cohort groups or similar patterns.

For example, if machines with Times New Roman and Comic Sans, but without Arial, look similar in their behaviour when the browser used is Google Chrome then that behaviour can be analysed to see if it is realistic.

Other factors, such as the location of the IP address, can also be considered. This cohort may represent a certain set of human users interacting with a site in a specific way, or it may demonstrate automated behaviour by software-driven bots.

Based on this analysis, it’s possible to spot patterns where suspect behaviour comes up.

This “fingerprint” can then be used for decisions around security responses. Depending on the situation, the response can either challenge that user in the midst of their activity or prevent a bot attack from working.

By using information from the whole machine or device environment, it can be easier to spot where criminal behaviour is taking place. Sharing this information can make it easier for everyone; if one company spots a new bot attack pattern, then others can benefit from this new threat intelligence as well.

However, this is not as simple as certain activities being identified as “good” and blocking the rest.

Instead, a spectrum of responses can be designed and used as appropriate so that false positives are avoided or accounted for.

For example, forcing a CAPTCHA image on web pages can be aggravating to a real-world user, but this can be better than refusing to serve pages at all without warning.

For the bots involved, the pattern of behaviour can be stopped so that attacks or fraudulent activity can be prevented.
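A graded response of this kind could be sketched as a simple mapping from a risk score to an action. The score range, the two thresholds and the action names below are all assumptions made for illustration; the article does not specify how responses are chosen.

```python
def choose_response(risk_score, challenge_at=0.5, block_at=0.9):
    """Map a risk score in [0, 1] to an action (thresholds are assumed values)."""
    if not 0.0 <= risk_score <= 1.0:
        raise ValueError("risk_score must be between 0 and 1")
    if risk_score >= block_at:
        return "block"    # high confidence that this is a bot
    if risk_score >= challenge_at:
        return "captcha"  # uncertain: challenge rather than refuse outright
    return "allow"        # looks like ordinary traffic
```

Keeping a middle tier means a legitimate user who is wrongly flagged faces a challenge rather than a blank page.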

What’s next for security?

This area of security is not standing still.

The response from the malware industry is to develop smarter ways to avoid detection too. Just as security teams work to spot potential attacks, so malware writers and bot creators are developing their own approaches.

For security professionals, this arms race represents a continuous treadmill of attack and defence.

However, the use of data and machine learning provides defenders with more insight into what challenges they face.

Overall, the role for machine learning and automation is to make it easier to respond to potential attacks.

By creating new fingerprints for activities behind individual attacks, machine learning should make it possible to block whole families of bots before they threaten productivity or profitability.

Sourced by Stephen Singam, managing director – security research and Tim Hopper, data scientist at Distil Networks